PQA: Perceptual Question Answering

Yonggang Qi 1* Kai Zhang1* Aneeshan Sain2 Yi-Zhe Song2

1Beijing University of Posts and Telecommunications, CN 2SketchX, CVSSP, University of Surrey, UK

CVPR 2021

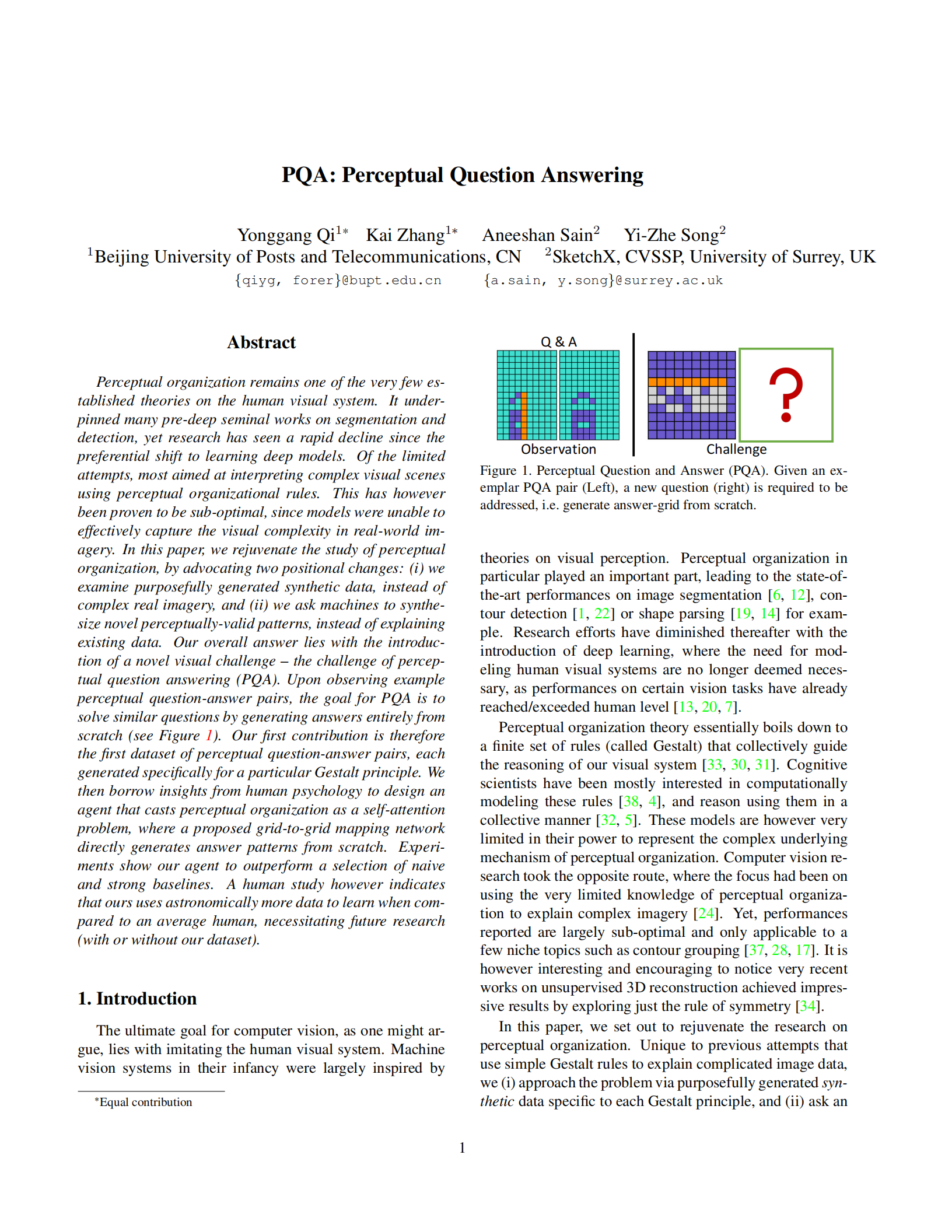

Figure 1. Perceptual Question and Answer (PQA). Given an ex-emplar PQA pair (Left), a new question (right) is required to be addressed, i.e. generate answer-grid from scratch.

Introduction

Perceptual organization remains one of the very few established theories on the human visual system. It underpinned many pre-deep seminal works on segmentation and detection, yet research has seen a rapid decline since the preferential shift to learning deep models. Of the limited attempts, most aimed at interpreting complex visual scenes using perceptual organizational rules. This has however been proven to be sub-optimal, since models were unable to effectively capture the visual complexity in real-world imagery. In this paper, we rejuvenate the study of perceptual organization, by advocating two positional changes: (i) we examine purposefully generated synthetic data, instead of complex real imagery, and (ii) we ask machines to synthesize novel perceptually-valid patterns, instead of explaining existing data. Our overall answer lies with the introduction of a novel visual challenge -- the challenge of perceptual question answering (PQA). Upon observing example perceptual question-answer pairs, the goal for PQA is to solve similar questions by generating answers entirely from scratch (see Figure 1). Our first contribution is therefore the first dataset of perceptual question-answer pairs, each generated specifically for a particular Gestalt principle. We then borrow insights from human psychology to design an agent that casts perceptual organization as a self-attention problem, where a proposed grid-to-grid mapping network directly generates answer patterns from scratch. Experiments show our agent to outperform a selection of naive and strong baselines.

PQA Challenge

Dataset Preview

Figure 2. PQA Dataset. Each row from (a) to (g) corresponds to a specific Gestalt law, and a few examples of PQA pair with question (left) and answer (right) are visualized. Zoom in for better visualization.

Explore More PQA Pairs

We provide visualization of more PQA pairs below. Simply select a task and an index to view.

Raw Data Format

All PQA pairs are stored in JSON file. Each JSON file contains a dictionary with two fields:

- "train": a list of exemplar Q/A pairs.

- "test": a list of test Q/A pairs.

Each "pair" has two fields:

- "input": a question "grid".

- "output": an output "grid".

Each "grid" (width w, height h) is composed of w*h color symbols. Each color symbol is one of 10 pre-defined colors.

Our Solution

Figure 3. Framework overview.

As shown in Figure 3, our proposed network is an encoder-decoder architecture which is tailored from Transformer. In general, the encoder is a stack of N identical layers, and each layer takes inputs from three sources: (i) test question embedding (the first layer) or output of last layer (the other layers), (ii) positional encoding and (iii) context embedding. The decoder then generates an answer-grid by predicting all symbols at every location of the grid. There are three fundamental components in our transformer architecture: (i) A context embedding module based on self-attention mechanism that is used to encode exemplar PA grid pairs which itself is a part of the input fed into the input encoder. This plays a significant role in inferring the implicit Gestalt law and later generalizing onto the new test question. (ii) We extend positional encoding to adapt to 2D grids-case instead of working on 1D sequence-case, as the 2D locations of symbols are of crucial importance for our problem. (iii) As ours is a 2D grid-to-grid mapping problem, the decoder is tailored to predict all symbols in parallel, i.e., all the color grids are produced in one pass to form an answer instead of one output at a time.

Results

For each question, one credit is given only if all the symbols of the generated answer are correct, i.e., error-free criteria, no credit at all otherwise. The accuracy is thus the percentage of absolute correct answers. Results are shown in Table 1.

| Method | T1 | T2 | T3 | T4 | T5 | T6 | T7 | Avg |

|---|---|---|---|---|---|---|---|---|

| ResNet-34 | 79.6 | 17.6 | 17.9 | 85.2 | 0 | 19.6 | 0.1 | 31.4 |

| ResNet-101 | 73.9 | 10.6 | 0.1 | 50.9 | 0 | 1.7 | 0 | 19.6 |

| LSTM | 55.7 | 23.2 | 25.6 | 38.2 | 0 | 7.4 | 2.8 | 21.8 |

| bi-LSTM | 81.9 | 26.6 | 75.6 | 85.9 | 0 | 41.4 | 23.4 | 47.8 |

| Transformer | 16.8 | 11.3 | 87.4 | 0.3 | 0 | 0.1 | 0 | 16.7 |

| TD+H-CNN | 88.8 | 89.8 | 78.8 | 96.4 | 0 | 50.8 | 9.3 | 59.1 |

| Ours | 82.6 | 97.6 | 93.7 | 96.9 | 61.8 | 82.7 | 98.9 | 87.8 |

Table 1. Comparison results (%) of models trained on all tasks.

To further evaluate the training efficiency of each model, we provide different amounts of data for training. We can observe from Figure 4 that the scale of training data significantly affects model's performance. Unlike our model, humans can learn the task-specific rule from very limited examples. This clearly signifies just how unexplored this topic is, and in turn encourages future research to progress towards human-level intelligence.

Figure 4. Testing results on varying training data volume.

Bibtex

If this work is useful for you, please cite it:

@inproceedings{yonggang2021pqa,

title={PQA: Perceptual Question Answering},

author={Yonggang Qi, Kai Zhang, Aneeshan Sain, Yi-Zhe Song},

booktitle={CVPR},

year={2021}

}

Proudly created by Kai Zhang @ BUPT

2021.3

arXiv

arXiv Code

Code